2026 is the year when AI-powered systems, smarter cloud migration, and agent-led cybersecurity converge, and leaders who invest in control, trust, and execution speed will pull ahead. The key tech trends for 2026 include:

- Agentic AI becomes a new “work layer”: people state an outcome, systems execute.

- Leaders stop obsessing over models and start building AI automation systems: orchestration, evaluation, permissions, monitoring, and audit trails.

- Cloud migration gets more selective: hybrid cloud and workload placement choices will be driven by latency, data location, and inference cost.

- Cybersecurity shifts to “agent-led defense,” while attackers scale prompt attacks and deception. Expect growth in prompt injection and “shadow agents.”

- AI governance becomes board-level hygiene: oversight gaps are already expensive, especially with shadow AI.

- Physical AI (robotics) moves from experiments to real deployments.

- Quantum risk stops being “future-you’s problem”: begin post-quantum cryptography planning now.

- AI legal and policy volatility becomes an operational risk: leaders should assume more lawsuits, more scrutiny, and more variance by jurisdiction, and design their AI program to survive it.

If 2024 was “AI curiosity” and 2025 was “AI pilots everywhere,” 2026 is when the bill comes due, and that can be good news if you plan well. The most important tech trends of 2026 aren’t about demos. They’re about measurable outcomes: faster decisions, leaner operations, stronger cybersecurity, and calmer nights for leadership teams.

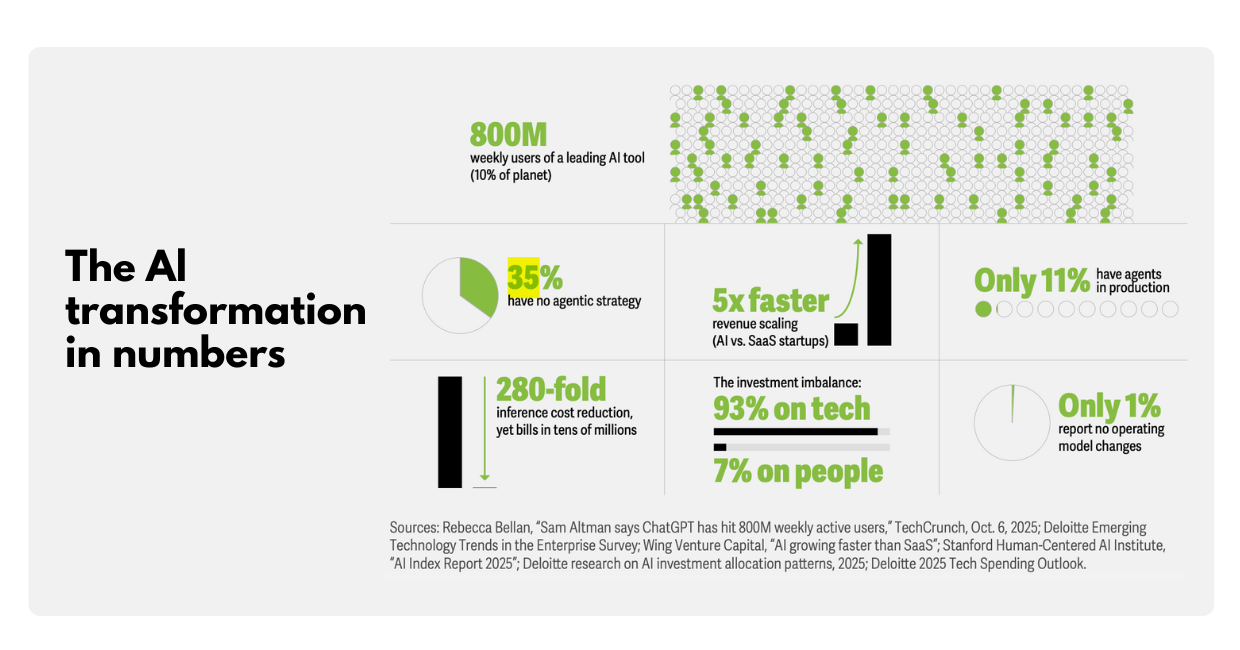

And yes, your competitors are moving. In one survey, 52% of executives at gen-AI-using organizations said they already have AI agents in production.

Below is a practical, leader-friendly guide to what’s likely to matter most in 2026, and what to do about it.

Trend 1: Agentic AI becomes the default way work gets done

Here’s the simplest way to understand agentic AI: instead of asking software to help inside an app, you tell it the outcome you want, and it coordinates tools, data, and steps to get you there (with your approval where it matters). Google describes this as a shift toward intent-based computing, where employees state a desired outcome, and systems determine how to deliver it.


What changes in 2026

- AI agents move from “assistant” to “doer,” especially for multi-step tasks.

- More agent ecosystems go from pilots to production (especially in regulated spaces like financial services, where audit chains matter).

- Customers start expecting concierge-style experiences, not chatbot scripts, because agents can remember context (when you allow it) and complete actions end-to-end.

- “Agentic commerce” quietly accelerates: more customer-facing assistants won’t just recommend, but also prepare carts, draft purchase orders, schedule delivery, and route approvals (with humans stepping in at the “money moments”).

- Expect buying journeys to shift from “search-compare-checkout” to “ask-verify-approve,” which puts more pressure on product data quality, pricing rules, and fraud controls than on the chatbot UI.

What leaders should ask before scaling

- Where will agents be allowed to take action vs. only recommend?

- What does approval look like at “high-risk moments” (payments, deletions, customer data access)?

- Can we see an audit trail for what the agent did and why?

- If an agent can buy, book, or pay: what are the guardrails (spend limits, vendor allow-lists, step-up auth, and “two-person rule” approvals)?

- If an agent is customer-facing: what’s the escalation path when it’s unsure, and how do you prevent confident-but-wrong recommendations from becoming refunds, chargebacks, or reputational risk?
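To make the buy/book/pay guardrails concrete, here is a minimal Python sketch of a pre-transaction policy check. It is an illustration under stated assumptions, not a reference design: the `PurchaseRequest` type, the spend limit, the two-person threshold, and the vendor names are all hypothetical placeholders, and a real system would also write each decision to the audit trail.

```python
from dataclasses import dataclass, field

# Hypothetical policy values; tune them to your own risk appetite.
SPEND_LIMIT = 500.00             # an agent may spend up to this amount unassisted
TWO_PERSON_THRESHOLD = 5_000.00  # above this, two distinct human approvers are required
VENDOR_ALLOW_LIST = {"acme-supplies", "globex-travel"}


@dataclass
class PurchaseRequest:
    agent_id: str
    vendor: str
    amount: float
    step_up_verified: bool = False  # e.g. the requesting user re-authenticated with MFA
    approvers: set[str] = field(default_factory=set)  # human approver IDs


def check_purchase(req: PurchaseRequest) -> tuple[bool, str]:
    """Return (allowed, reason). Deny by default so every decision is explainable."""
    if req.amount <= 0:
        return False, "invalid amount"
    if req.vendor not in VENDOR_ALLOW_LIST:
        return False, f"vendor {req.vendor!r} is not on the allow-list"
    if req.amount <= SPEND_LIMIT:
        return True, "within agent spend limit"
    if not req.step_up_verified:
        return False, "step-up authentication required above the spend limit"
    if req.amount > TWO_PERSON_THRESHOLD and len(req.approvers) < 2:
        return False, "two-person approval required"
    return True, "approved after step-up checks"
```

The check returns a reason on every path, which is what makes a denied (or approved) purchase explainable after the fact.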

In 2026, agentic AI stops being a side project. It becomes a productivity baseline, and your advantage comes from how safely and reliably you operationalize it.

Trend 2: “AI-first” shifts from tools to operating model

Many teams got stuck in 2025 with “AI features” that were impressive but hard to trust. In 2026, the focus moves to systems: permissions, high-quality data, monitoring, and repeatable workflows that deliver ROI. Agents turn AI from an “add-on” into the core of an AI-first process, which requires a shift in mindset and corporate culture.

The big moves you’ll see this year:

1) From “vibe coding” to governed delivery

IBM researchers describe an emerging approach where users define goals and validate progress while agents execute, requesting approval at key checkpoints.

2) Teams of agents, not one super-bot

Expect the rise of “agents talking to other agents” and orchestrated teams that coordinate workflows across departments.

3) Smarter data beats bigger models

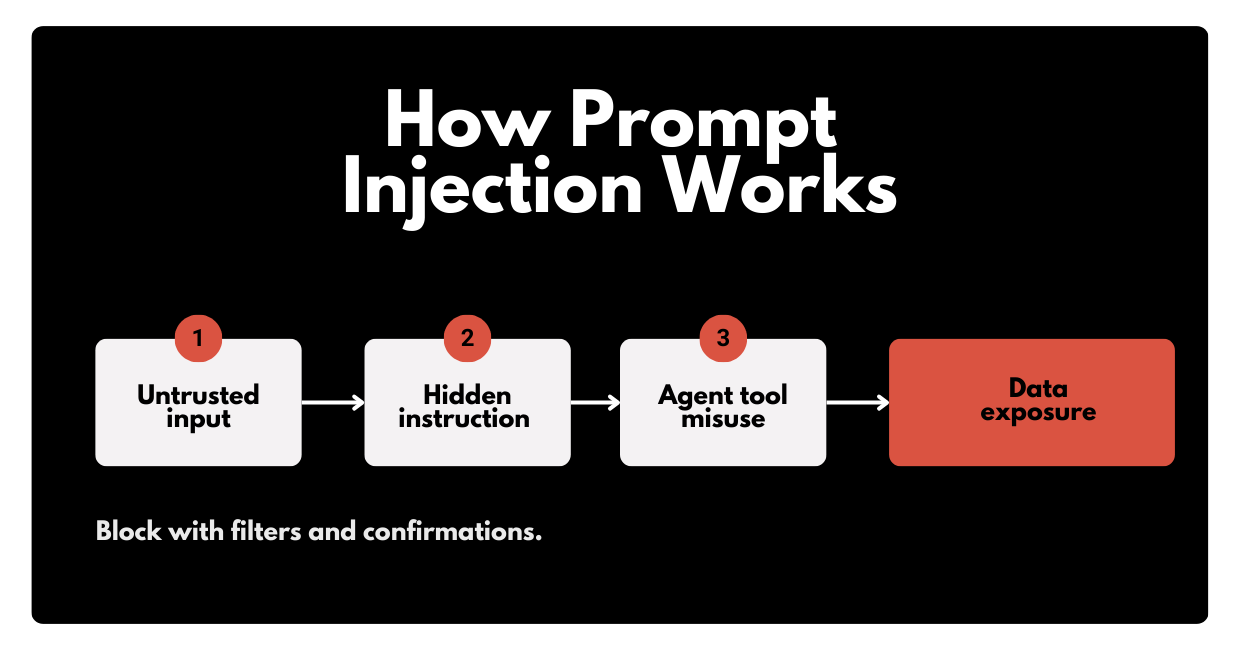

Leaders increasingly prioritize permission-aware, structured enterprise data to produce trustworthy answers, especially as production risk from prompt injection grows.

4) AI sovereignty becomes strategic

Nearly 93% of executives surveyed said factoring AI sovereignty into business strategy will be a must in 2026, driven by concerns about technology dependence, regional concentration of compute, and data/IP risks.

5) More Western products will run on top of models built outside the US

As open-weight LLMs improve (and more of them come from other countries), some teams will choose models based on performance-per-dollar and deployability, even if the vendor ecosystem (and geopolitical risk) looks different from what legal teams are used to.

Practical takeaways for execs:

- Build an “agent operating model”: ownership, approval rules, monitoring, and incident response.

- Treat permissions as product features (least privilege isn’t just for IT anymore).

- Put cost management (FinOps-style discipline) around agent usage and inference spend.

- Add “model supply-chain due diligence” to your standard rollout checklist: license review, provenance, security testing, red-teaming for prompt-injection/tool misuse, and a fallback plan if you need to swap models.

- Assume model choice becomes more dynamic in 2026: teams will mix closed and open-weight options across workloads and optimize for risk and cost, not hype.

In 2026, the winners won’t be the teams with the most AI tools. The winners will be the teams with the cleanest, safest execution system for AI automation.

Trend 3: Cloud strategy gets sharper (and more hybrid)

Let’s retire the old debate: “Everything goes to cloud” vs. “Bring it all back.” The real 2026 conversation is workload placement based on cost, performance, data sensitivity, and resilience.

Research shows that data is often spread across multiple environments, and breaches involving data distributed across environments can be more expensive and take longer to contain.

What’s changing in 2026:

- Cloud migration decisions become more granular: some workloads move, others modernize in place.

- Hybrid cloud becomes the default for many enterprises because AI workloads stress latency, data locality, and cost predictability.

- Security strategy must assume mixed environments, not a single perimeter.

- More teams run “split inference”: sensitive context and retrieval stay close to data, while less sensitive tasks burst to cheaper compute, so your identity, logging, and routing decisions matter as much as your cloud provider choice.
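One way to picture split inference is as a placement rule evaluated per request: find the environments cleared for the data’s sensitivity (and region, if residency applies), then pick the cheapest. The sketch below assumes a simple three-level sensitivity scale; the environment names, regions, and per-token prices are hypothetical, and real routing would also weigh latency, identity, and logging requirements.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2  # e.g. customer PII or regulated data


@dataclass(frozen=True)
class Target:
    name: str
    max_sensitivity: Sensitivity  # highest data class this environment is cleared for
    cost_per_1k_tokens: float     # illustrative numbers only
    region: str


# Hypothetical environments; names, regions, and prices are placeholders.
TARGETS = [
    Target("on-prem-gpu", Sensitivity.RESTRICTED, 0.90, "eu-home"),
    Target("private-cloud", Sensitivity.INTERNAL, 0.40, "eu-home"),
    Target("public-burst", Sensitivity.PUBLIC, 0.10, "us-any"),
]


def place(sensitivity: Sensitivity, required_region: Optional[str] = None) -> Target:
    """Return the cheapest environment cleared for this data class (and region, if pinned)."""
    eligible = [
        t for t in TARGETS
        if t.max_sensitivity >= sensitivity
        and (required_region is None or t.region == required_region)
    ]
    if not eligible:
        raise ValueError("no environment is cleared for this request")
    return min(eligible, key=lambda t: t.cost_per_1k_tokens)
```

For example, `place(Sensitivity.PUBLIC)` picks `public-burst`, while the same request pinned to `eu-home` lands on `private-cloud`. Restricted data never leaves `on-prem-gpu` in this setup, however cheap the alternatives are.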

Three “cloud” risks leaders underestimate

- Cloud security drift: misconfigurations and identity sprawl scale quietly until they trigger a serious incident.

- “Shadow AI” + multi-environment data: one unmonitored AI workflow can expose data widely.

- Model and agent sprawl across environments: the more quickly teams swap models and spin up agents, the easier it is to lose track of where prompts, tool calls, and outputs are actually flowing.

Planning cloud migration while rolling out agents? Zazmic can help you design a secure hybrid cloud architecture that supports AI without turning your environment into a maze.

Trend 4: Cybersecurity becomes an agent-vs-agent fight

2026 is when attackers stop scaling only against humans and start going after your agents directly.

Palo Alto Networks predicts a surge in AI agent attacks where adversaries use prompt injection or tool misuse to co-opt trusted agents, turning them into autonomous insiders.

Google Cloud’s Cybersecurity Forecast similarly expects threat actors to use AI routinely, including prompt attacks and agentic systems that automate steps across the attack lifecycle.

What leaders should expect in 2026:

1) A big rise in prompt injection

Forecasts are clear: prompt injection is already here, and it is expected to increase throughout 2026.

2) “Shadow agent” risk becomes a board conversation

By 2026, security leaders expect “shadow AI” to escalate into a “shadow agent” challenge: employees deploying powerful agents outside approved controls, creating invisible pipelines for sensitive data.

3) Identity becomes the control plane

Palo Alto Networks frames identity as the primary battleground of the AI economy, including deepfake-driven deception (“CEO doppelganger”) and machine identities outnumbering humans.

4) The SOC shifts from alerts to action

Security teams are already strained: 82% of SOC analysts are concerned they’re missing real threats due to alert volume. The response is the “agentic SOC,” where analysts direct AI agents and receive case-ready summaries instead of raw alerts.
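The grouping step behind case-ready summaries can be sketched without any AI at all: correlate raw alerts by the entity they concern and by time, then hand the agent (or analyst) one case instead of many alerts. This Python sketch assumes a simplified `Alert` record and a one-hour correlation window, both hypothetical; in an agentic SOC, an AI agent would typically enrich and summarize each resulting case.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Alert:
    entity: str    # host or user the alert is about
    rule: str      # detection that fired
    severity: int  # 1 (low) .. 5 (critical)
    ts: int        # epoch seconds


def build_cases(alerts: list[Alert], window_s: int = 3600) -> list[dict]:
    """Group alerts per entity; a new case starts after a quiet gap longer than window_s."""
    by_entity = defaultdict(list)
    for a in sorted(alerts, key=lambda a: a.ts):
        by_entity[a.entity].append(a)

    cases = []
    for entity, items in by_entity.items():
        current = [items[0]]
        for a in items[1:]:
            if a.ts - current[-1].ts > window_s:
                cases.append(_summarize(entity, current))
                current = []
            current.append(a)
        cases.append(_summarize(entity, current))
    # Highest-severity cases first, so analysts start with what matters most.
    return sorted(cases, key=lambda c: c["max_severity"], reverse=True)


def _summarize(entity: str, items: list[Alert]) -> dict:
    return {
        "entity": entity,
        "alert_count": len(items),
        "rules": sorted({a.rule for a in items}),
        "max_severity": max(a.severity for a in items),
        "first_seen": items[0].ts,
        "last_seen": items[-1].ts,
    }
```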

The uncomfortable part: breaches are still expensive

The global average breach cost declined to USD 4.44M, but the US average rose past USD 10M. And 16% of breaches involved attackers using AI (often in phishing and deepfake attacks).

The practical defense tips for 2026:

- Extend zero trust to agents: strong auth, least privilege, continuous verification.

- Implement AI governance + runtime controls (“circuit breakers”/“AI firewall” concepts for risky actions).

- Treat AI assets as an attack surface: models, data, tools, plugins, and agent identities.

- Invest in automation where it measurably reduces breach cost and time.

- Add “tool-call safety” as a first-class security control: validate inputs, limit what tools can do, and require step-up approval when the agent crosses risk thresholds.
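Tool-call safety and the “circuit breaker” idea can be combined in one gate that runs before every tool invocation. In this minimal Python sketch, which assumes a hypothetical `TOOL_POLICY` table, validator rules, and risk scores, unknown tools and malformed arguments are denied, high-risk tools need a named human approver, and repeated denials trip a breaker that suspends the agent until someone reviews it.

```python
import time
from collections import deque
from typing import Any, Callable, Optional

# Hypothetical policy: each tool lists its allowed parameters (with validators) and a risk score.
TOOL_POLICY: dict[str, dict[str, Any]] = {
    "search_docs": {
        "params": {"query": lambda v: isinstance(v, str) and len(v) < 500},
        "risk": 1,
    },
    "send_email": {
        "params": {
            "to": lambda v: isinstance(v, str) and v.endswith("@example.com"),  # internal only
            "body": lambda v: isinstance(v, str) and len(v) < 10_000,
        },
        "risk": 3,
    },
}
STEP_UP_RISK = 3  # calls at or above this risk need a named human approver


class CircuitBreaker:
    """Trips after max_denials denied calls within window_s seconds; stays open until reset."""

    def __init__(self, max_denials: int = 3, window_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_denials = max_denials
        self.window_s = window_s
        self.clock = clock
        self.denials = deque()  # timestamps of recent denials
        self.tripped = False

    def record_denial(self) -> None:
        now = self.clock()
        self.denials.append(now)
        # Drop denials that have aged out of the rolling window.
        while self.denials and now - self.denials[0] > self.window_s:
            self.denials.popleft()
        if len(self.denials) >= self.max_denials:
            self.tripped = True

    def reset(self) -> None:
        """Called by a human reviewer after investigating."""
        self.denials.clear()
        self.tripped = False


def gate_tool_call(tool: str, args: dict[str, Any], breaker: CircuitBreaker,
                   approved_by: Optional[str] = None) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed tool call."""
    if breaker.tripped:
        return False, "circuit open: agent suspended pending review"
    policy = TOOL_POLICY.get(tool)
    if policy is None:
        breaker.record_denial()
        return False, f"tool {tool!r} not permitted"
    if set(args) != set(policy["params"]):
        breaker.record_denial()
        return False, "unexpected or missing parameters"
    for name, is_valid in policy["params"].items():
        if not is_valid(args[name]):
            breaker.record_denial()
            return False, f"invalid value for {name!r}"
    if policy["risk"] >= STEP_UP_RISK and approved_by is None:
        # Not a violation, just a pause for a human, so it doesn't count toward the breaker.
        return False, "step-up approval required"
    return True, "allowed"
```

Passing a fake `clock` makes the breaker easy to test; in production the default monotonic clock applies.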

One data point stands out: extensive use of security AI and automation correlated with USD 1.9M lower breach costs and 80 days faster containment. 2026 won’t be “AI vs. security.” It’ll be AI-powered offense vs. AI-powered defense, and your advantage will come from governance, identity control, and speed.

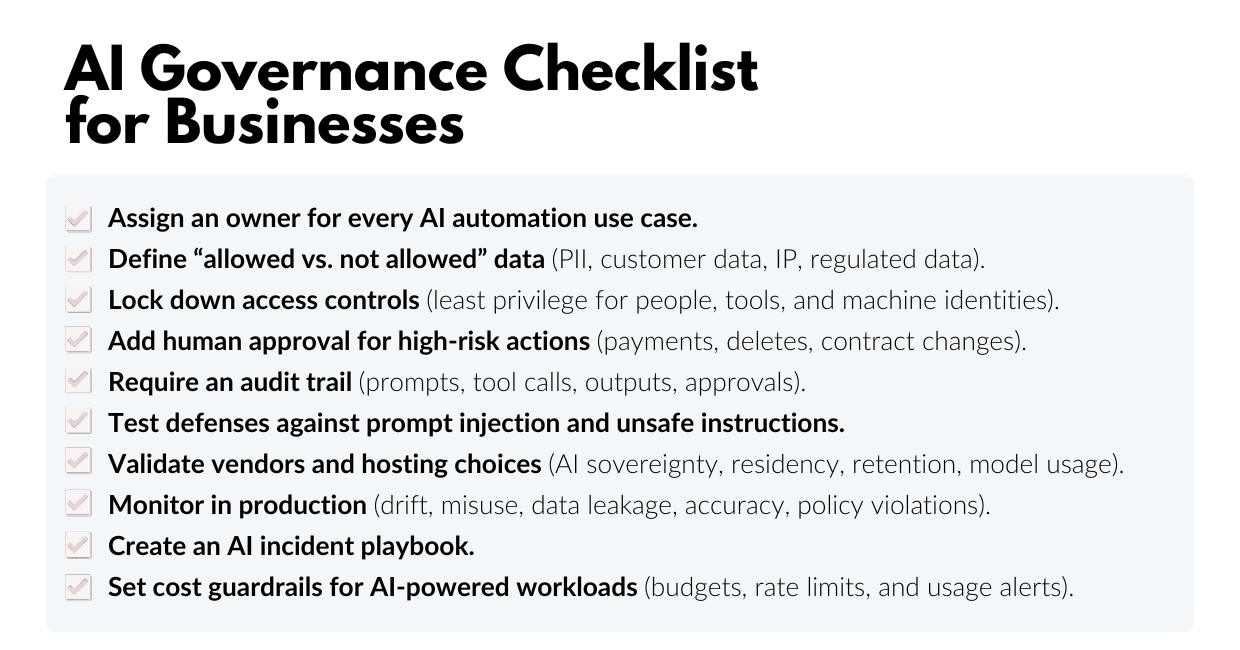

Trend 5: AI governance becomes non-optional

If you only read one section, make it this one.

The “do it now, govern later” approach is already showing consequences. IBM reports that organizations are skipping security and governance in favor of rapid adoption, and that ungoverned systems are more likely to be breached and more costly when they are.

The governance gaps that matter most in 2026:

- Access controls: 97% of organizations that reported an AI-related breach lacked proper AI access controls.

- Shadow AI: high levels of shadow AI added about USD 670K to breach costs versus low/no shadow AI.

- Approval workflows: many breached organizations lacked AI governance policies or were still developing them.

- Regulatory and legal pressure intensifies: even if national rules move slowly, states, regulators, and courts can still shape what “reasonable” AI controls look like, especially for consumer-facing assistants and high-impact decisions.

- Liability questions get practical: if an assistant gives harmful guidance, invents facts, or nudges a user toward risky actions, your “we didn’t mean it” posture won’t matter nearly as much as your logs, guardrails, testing evidence, and escalation design.

What good AI governance looks like (without slowing the business):

- A lightweight intake and approval process for new agents/use cases.

- Clear data rules: what can/can’t be used in prompts and tool calls.

- Monitoring for model drift, misuse, and unexpected actions (with audit trails).

- Incident response plans that include AI-specific vectors like prompt injection and supply chain compromise.

- Clear accountability for customer-facing agents: who owns the outcomes, who approves policy changes, and how fast you can disable risky behaviors.

- “Receipts” for safety: documented evaluations, red-team results, and a defensible definition of high-risk actions (so you can show due diligence when a regulator, auditor, insurer, or plaintiff’s lawyer comes knocking).
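Audit trails only count as “receipts” if nobody can quietly rewrite them. A common pattern is a hash-chained, append-only log, sketched below in Python under the assumption that each entry records the agent, the action, and the reason. It is tamper-evident rather than tamper-proof: to detect someone rewriting the whole chain, you would also need to anchor the latest hash in a system the agent cannot write to.

```python
import hashlib
import json
import time
from typing import Any, Optional


class AuditLog:
    """Append-only log where each entry embeds the previous entry's hash,
    so any later edit or deletion breaks the chain."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    def append(self, agent_id: str, action: str, reason: str,
               ts: Optional[float] = None) -> dict[str, Any]:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "ts": time.time() if ts is None else ts,
            "agent_id": agent_id,
            "action": action,
            "reason": reason,  # the "why" behind the action
            "prev_hash": prev,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    @staticmethod
    def _digest(record: dict[str, Any]) -> str:
        # Canonical JSON (sorted keys, no extra whitespace) so the hash is reproducible.
        body = {k: v for k, v in record.items() if k != "hash"}
        canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        """Recompute every hash and link; False means something was altered."""
        prev = self.GENESIS
        for rec in self.entries:
            if rec["prev_hash"] != prev or rec["hash"] != self._digest(rec):
                return False
            prev = rec["hash"]
        return True
```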

AI governance is not routine paperwork you can put at the end of your to-do list. It’s how you scale AI automation safely, keep regulators comfortable, and protect your IP.

Trend 6: Physical AI and robotics cross the “useful at scale” line

“Physical AI” is the bridge between AI and the real world: robots and systems that perceive, plan, and act in physical environments.

Deloitte points to an inflection point where physical AI–driven robots can move from niche to more mainstream adoption, if organizations solve technical, operational, and regulatory challenges.

What you’ll see more of in 2026:

- Robotics in controlled environments (factories, warehouses, structured logistics).

- More simulation-driven training (and more focus on closing the simulation-to-reality gap).

- Safety and “real-time” constraints become the deciding factor (a one-second delay is fine for chat; it’s not fine for a moving robot).

Physical AI will create real operational wins in 2026, but only for leaders who treat safety, reliability, and governance as first-class requirements.

Trend 7: Quantum readiness moves onto the roadmap

You don’t need a quantum lab to be affected by quantum timelines. You need a plan for encryption modernization.

Deloitte highlights quantum security as an emerging frontier and recommends preparing for the transition as quantum capabilities threaten current encryption methods. IBM also lists quantum security tools among the factors associated with reduced breach costs.

What leaders should do in 2026:

- Start inventorying cryptography now (where keys live, what protocols you rely on).

- Build a phased post-quantum cryptography migration plan.

- Align vendors and critical partners early (supply chains are part of your risk).
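A cryptography inventory becomes actionable once each entry is classified. The sketch below is a minimal Python illustration: it flags public-key algorithms that a large quantum computer running Shor’s algorithm would break (RSA, Diffie-Hellman, DSA, and elliptic-curve schemes), treats NIST’s FIPS 203/204/205 algorithms as post-quantum, and moves long-lived data to the front of the queue because of “harvest now, decrypt later” risk. The `CryptoAsset` fields and the 10-year cutoff are hypothetical choices, not a standard.

```python
from dataclasses import dataclass

# Public-key families that Shor's algorithm would break on a large enough quantum computer.
QUANTUM_VULNERABLE = {"RSA", "DH", "DSA", "ECDH", "ECDSA", "EDDSA"}
# NIST post-quantum standards (FIPS 203, 204, 205).
PQC_READY = {"ML-KEM", "ML-DSA", "SLH-DSA"}
LONG_LIVED_YEARS = 10  # hypothetical cutoff for "harvest now, decrypt later" exposure


@dataclass
class CryptoAsset:
    system: str               # where the key or protocol lives
    algorithm: str            # e.g. "RSA", "ECDSA", "AES"
    key_bits: int
    data_lifetime_years: int  # how long the protected data must stay confidential


def classify(asset: CryptoAsset) -> str:
    alg = asset.algorithm.upper()
    if alg in PQC_READY:
        return "ok: post-quantum"
    if alg in QUANTUM_VULNERABLE:
        # Data captured today could be decrypted later, so long-lived secrets go first.
        return "migrate-first" if asset.data_lifetime_years >= LONG_LIVED_YEARS else "migrate"
    if alg == "AES" and asset.key_bits < 256:
        return "review: consider AES-256"
    return "review"


def prioritize(inventory: list[CryptoAsset]) -> list[tuple[str, str]]:
    """Return (system, label) pairs with the most urgent migrations first."""
    order = {"migrate-first": 0, "migrate": 1}
    labeled = [(a.system, classify(a)) for a in inventory]
    return sorted(labeled, key=lambda pair: order.get(pair[1], 2))
```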

Quantum readiness is a multi-year migration, so 2026 is a good year to start the process.

What smart leaders do now: a 90-day priority list

Here’s a practical plan that doesn’t require predicting the future perfectly (because nobody can). What you can do is start preparing your business now, because speed of adaptation matters more than certainty of prediction.

Days 1–30: Get control of what already exists

- Inventory current AI agents (and shadow usage) across teams.

- Define data boundaries (what’s safe for prompts, tools, and external APIs).

- Pick 2-3 high-value workflows for AI automation with clear ROI metrics.

- Inventory model dependencies: which teams are using which providers/models (including open-weight), and where those weights run.
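For the shadow-AI part of the inventory, one starting point is to scan egress or proxy logs for traffic to known AI API hosts that doesn’t come from a sanctioned integration. This Python sketch assumes a simplified CSV export with `team` and `host` columns; the host list is an example, not a complete catalog, and the `SANCTIONED` pairs are hypothetical. It will not catch browser-based chat use or self-hosted models, so treat it as one signal among several.

```python
import csv
import io
from collections import Counter

# Example AI API hostnames; extend with the providers relevant to your organization.
KNOWN_AI_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}
# Hypothetical: (team, host) pairs with an approved, governed integration.
SANCTIONED = {("data-platform", "generativelanguage.googleapis.com")}


def find_shadow_ai(proxy_log_csv: str) -> Counter:
    """Count calls to known AI hosts that don't come from a sanctioned (team, host) pair.
    Expects CSV columns team,host (a simplified stand-in for real proxy logs)."""
    hits: Counter = Counter()
    for row in csv.DictReader(io.StringIO(proxy_log_csv)):
        host = row["host"].strip().lower()
        team = row["team"].strip()
        if host in KNOWN_AI_HOSTS and (team, host) not in SANCTIONED:
            hits[(team, host)] += 1
    return hits
```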

Days 31–60: Build the safety rails

- Implement baseline AI governance: approvals, logging, audit trails.

- Extend zero-trust principles to agents and tool access.

- Strengthen cloud security posture across multi-environment data paths.

- Add customer-facing “harm controls”: escalation to humans, refusal rules for risky requests, and monitoring for defamation-style errors and unsafe advice patterns.

Days 61–90: Scale what works (and only what works)

- Move one agent workflow into production with monitoring and incident runbooks.

- Stand up “agentic SOC” capabilities where possible (alert triage and case summaries).

- Draft your cloud migration placement rules for AI workloads (where/why workloads run).

- Run a “swap test”: prove you can replace one model with another (or move it between environments) without breaking governance, logging, or security controls, because vendor and policy shifts will happen.
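A swap test is easiest when governance lives in a wrapper around the model rather than inside each vendor integration. In this minimal Python sketch, `GovernedModel` applies the same (deliberately crude) data rule and logging to any object with a `complete` method; `EchoModelA` and `EchoModelB` are hypothetical stand-ins for real provider clients, and the blocked-term check is a placeholder for real data rules.

```python
from typing import Protocol


class ChatModel(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


class GovernedModel:
    """Wraps any ChatModel so data rules and logging don't depend on the vendor."""

    def __init__(self, model: ChatModel, blocked_terms: set[str]):
        self.model = model
        self.blocked_terms = blocked_terms
        self.log: list[dict[str, str]] = []

    def complete(self, prompt: str) -> str:
        if any(term in prompt.lower() for term in self.blocked_terms):
            self.log.append({"model": self.model.name, "event": "blocked"})
            raise PermissionError("prompt violates data rules")
        out = self.model.complete(prompt)
        self.log.append({"model": self.model.name, "event": "completed"})
        return out


# Hypothetical stand-ins; real implementations would call a provider SDK.
class EchoModelA:
    name = "model-a"

    def complete(self, prompt: str) -> str:
        return f"A: {prompt}"


class EchoModelB:
    name = "model-b"

    def complete(self, prompt: str) -> str:
        return f"B: {prompt}"


def swap_test(models: list[ChatModel]) -> bool:
    """Pass only if every model, behind the same wrapper, shows identical governance behavior."""
    for m in models:
        governed = GovernedModel(m, blocked_terms={"ssn"})
        governed.complete("summarize the Q3 plan")
        try:
            governed.complete("look up customer SSN 123")
            return False  # the data rule failed to block
        except PermissionError:
            pass
        if [e["event"] for e in governed.log] != ["completed", "blocked"]:
            return False
    return True
```

With the two stand-ins, `swap_test([EchoModelA(), EchoModelB()])` returns `True`; the point is that the controls are checked per model, so a swap that silently drops logging or data rules fails the test.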

This year, winners will be the companies that build for execution. If your AI agents can act safely, your AI governance is clear, your cloud migration plan is intentional, and your cybersecurity is ready for AI-driven threats, you’ll move faster without creating new risk.

Turn Trends Into Your Competitive Edge

By 2026, the advantage won’t come from having “more AI.” It’ll come from running a tighter system: AI agents that can execute real work, AI governance that keeps actions and data use under control, cloud migration choices that balance speed with cost and resilience, and cybersecurity that’s built for an agent-heavy world. And as model ecosystems globalize and legal scrutiny sharpens, “tight systems” will increasingly mean: know your model supply chain, prove your controls, and design agents that are safe to transact, not just fun to chat with.

Leaders who treat these shifts as one connected strategy, rather than separate initiatives owned by different teams, will define the future and win their markets. Get the foundations right, and you’ll move faster with less risk (and far fewer surprises).

Want a second set of eyes on your tech strategy? Zazmic will pressure-test your 2026 plan, covering AI automation, cloud migration, and cybersecurity controls in one strategy session.

FAQ

What are the top tech trends of 2026 for businesses?

The biggest tech trends of 2026 cluster around production-grade agentic AI, selective cloud migration and hybrid cloud strategies, and AI-powered security operations that respond at machine speed.

Are AI agents different from chatbots?

Yes. AI agents can take actions through tools (apps, APIs, workflows) and complete multi-step tasks with an audit trail, while chatbots mainly answer questions.

What’s the biggest security risk with agentic AI?

There are two key threats: prompt injection (tricking the model into unsafe actions) and “shadow agent” deployments (unsanctioned agents moving data outside visibility).

How does AI governance help ROI?

It reduces rework, prevents data leakage, improves trust, and makes scaling possible. IBM’s research ties weak controls and shadow AI to higher breach risk and higher costs, both of which destroy ROI.

Should we move AI workloads during cloud migration, or keep them on-prem?

It depends on latency needs, data residency, steady vs spiky compute demand, and your operating model. Many organizations will land on a hybrid cloud for AI because data and risk are rarely “all in one place.”

What’s the most cost-effective cybersecurity investment for 2026?

Invest where it measurably reduces risk and response time: identity hardening, automation in detection/response, and controls that govern AI assets. Extensive security AI/automation correlates with lower breach costs and faster containment.

Where does Gemini 3 fit into 2026 planning?

Treat models (including Gemini 3) as interchangeable components. Your durable advantage comes from the surrounding system: data permissions, orchestration, evaluation, and AI governance.