As more teams bring AI and automation into their daily operations, one question keeps coming up:

Which platform should we build on?

The answer depends on your team’s skills, the complexity of your workflows, and how much control you want over the system.

At Zazmic, we’ve seen it all—from no-code tools to large-scale enterprise deployments—and we’ve pulled together insights from real-world benchmarks to help you choose wisely.

Here’s how n8n, LangChain/LangGraph, and Google ADK compare in today’s LLM-driven landscape—and when those differences actually matter.

Why Framework Performance Doesn’t Really Matter (But Sometimes Does)

Let’s start with a reality check.

Typical latencies for LLM calls and the supporting API calls dominate runtime:

- GPT-4: 2–5 seconds

- Claude 3: 2–4 seconds

- Gemini Pro: 0.8–3 seconds

- Embedding APIs: 0.2–0.5 seconds

- Vector search: 0.1–0.3 seconds

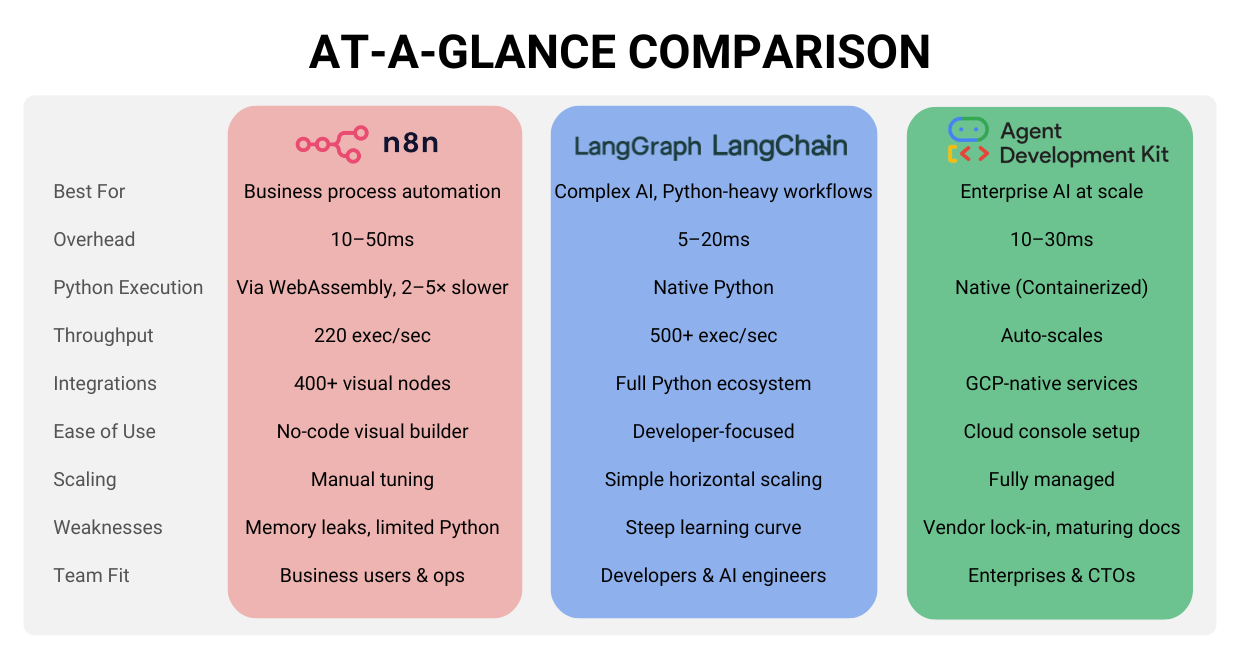

So whether your automation platform adds 10–50 milliseconds or 20–80 milliseconds of framework overhead, the difference is typically less than 2% of total execution time for a multi-second LLM call.
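To make that arithmetic concrete, here is a minimal sketch that compares the gap between the two overhead ranges with the LLM latencies listed above. It assumes the gap is the difference between the two upper bounds (80 ms minus 50 ms) and that a request makes a single LLM call. The function and constant names are illustrative, not part of any framework.

```python
# LLM latency ranges quoted in this article, as (fastest, slowest) in seconds.
LLM_LATENCY_S = {
    "GPT-4": (2.0, 5.0),
    "Claude 3": (2.0, 4.0),
    "Gemini Pro": (0.8, 3.0),
}

# Assumption: the gap between frameworks is the difference between the
# upper bounds of the two overhead ranges (80 ms - 50 ms = 30 ms).
OVERHEAD_GAP_S = 0.030


def overhead_gap_share(llm_latency_s: float, gap_s: float = OVERHEAD_GAP_S) -> float:
    """Return the framework-overhead gap as a fraction of one LLM call's latency."""
    return gap_s / llm_latency_s


def gap_share_table() -> dict[str, tuple[float, float]]:
    """Map each model to (percent at slowest response, percent at fastest response)."""
    return {
        model: (
            round(100 * overhead_gap_share(slow), 2),
            round(100 * overhead_gap_share(fast), 2),
        )
        for model, (fast, slow) in LLM_LATENCY_S.items()
    }


if __name__ == "__main__":
    for model, (low, high) in gap_share_table().items():
        print(f"{model}: {low}% to {high}% of call latency")
```

Under these assumptions the gap stays at or below 1.5% for the GPT-4 and Claude 3 ranges, and rises to about 3.75% only at the fastest quoted Gemini Pro response (0.8 seconds).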

When Performance Does Make a Difference

There are a few cases where framework speed and architecture should influence the choice, in either direction:

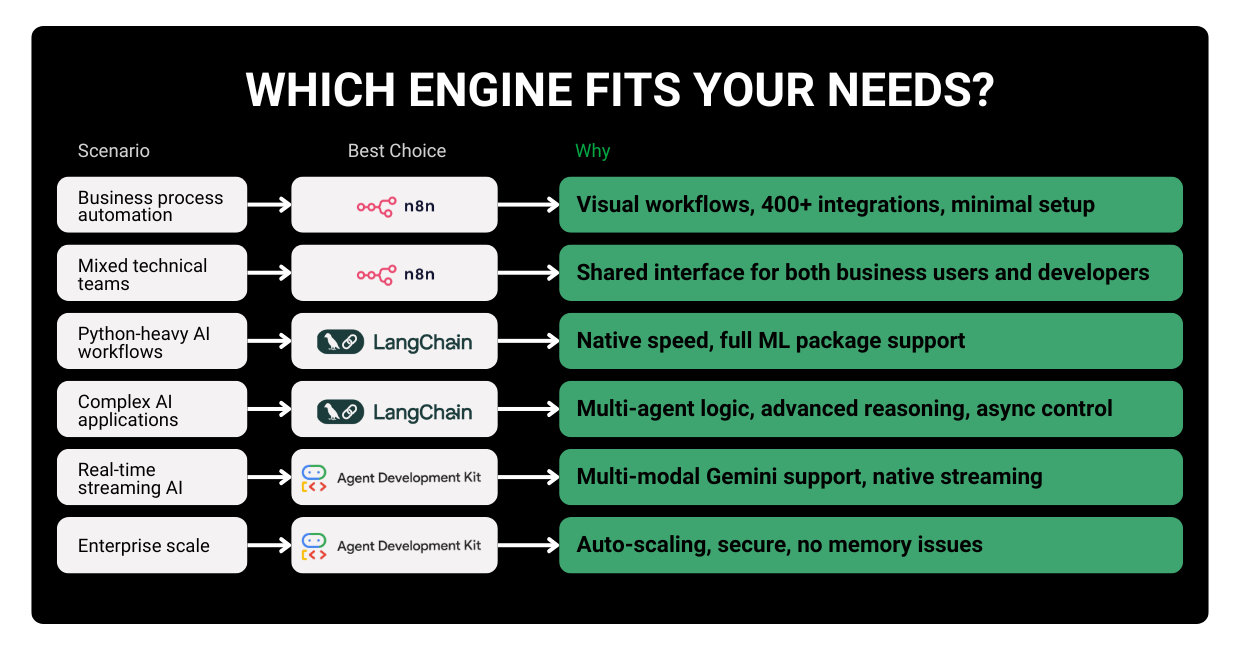

- Python-heavy workflows: Python code in n8n can run 2–5× slower than native and lacks key Python packages; LangChain and ADK run at native speed.

- High-frequency or real-time tasks: LangChain and ADK handle 1000+ executions per second; n8n peaks around 220.

- Simple integrations and business automations: Here, n8n’s visual builder and 400+ prebuilt nodes easily outweigh small delays.
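As a rough way to apply the throughput figures above, the sketch below checks which platforms cover a target load with some safety margin. The peak values are the ones quoted in this article (with "1000+" treated conservatively as 1000), not independent measurements, and the `headroom` parameter is a hypothetical safety factor added for illustration.

```python
# Approximate peak throughput per platform, in executions per second,
# taken from the figures quoted in this article (not independently measured).
PEAK_EXECUTIONS_PER_SECOND = {
    "n8n": 220,
    "LangChain/LangGraph": 1000,  # "1000+" treated as a lower bound
    "Google ADK": 1000,           # "1000+" treated as a lower bound
}


def platforms_with_headroom(required_eps: float, headroom: float = 0.5) -> list[str]:
    """List platforms whose quoted peak covers the load plus a safety margin.

    `headroom` is a hypothetical safety factor: 0.5 means the quoted peak
    must be at least 1.5x the required rate.
    """
    needed = required_eps * (1 + headroom)
    return [name for name, peak in PEAK_EXECUTIONS_PER_SECOND.items() if peak >= needed]


if __name__ == "__main__":
    for load in (100, 200):
        print(load, "executions/s ->", platforms_with_headroom(load))
```

With the default margin, a load of 100 executions per second leaves all three platforms in play, while 200 per second already rules out n8n under these assumptions.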

The bottom line:

For the vast majority of AI workflows, pick the platform that fits your team and goals, not the one that saves a few milliseconds.

n8n — For Fast, Accessible Workflow Automation

n8n is the clear choice for teams that need to automate quickly and without writing code. With over 400 pre-built integrations, a visual workflow builder, and an approachable setup process, it helps businesses move from idea to automation in minutes.

Ideal For:

- Business process automation: connecting CRMs, databases, SaaS tools, or approval flows

- Mixed teams: where developers and business users collaborate

- Rapid prototyping: build and test in hours, not days

Business Highlights:

- Time to first value: 5–30 minutes

- No coding required — drag, drop, and deploy

- Visual debugging and easy iteration

- 400+ SaaS integrations (Salesforce, HubSpot, Slack, Google Sheets, etc.)

Key Limitations:

- Limited Python support (runs via WebAssembly, 2–5× slower than native)

- Memory leaks and scaling issues with large workflows

- TypeScript-only for custom nodes

Verdict:

n8n wins when speed and simplicity matter more than deep AI flexibility. Perfect for operations, marketing, or support automation where ease of use drives adoption.

LangChain / LangGraph — For Complex AI and Python-Heavy Workflows

LangChain and LangGraph are built for the developers and data scientists driving advanced AI applications. They excel in custom, multi-agent, and data-intensive workflows that rely on Python’s vast ecosystem.

Ideal For:

- AI product teams and data engineers

- Complex reasoning or RAG pipelines

- Workflows needing native Python execution and advanced control

Business Highlights:

- Full Python ecosystem — pandas, PyTorch, scikit-learn, async support

- Minimal overhead (5–20ms) and efficient scaling

- Native debugging, tracing, and performance insights via LangSmith

- Capable of 1000+ executions per second
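Async support matters here because LLM calls spend most of their time waiting on the network. The sketch below uses only Python's standard `asyncio` module to show the idea: it is not LangChain or LangGraph code, and `fake_llm_call` is a hypothetical stand-in that sleeps instead of calling a real model.

```python
import asyncio
import time


async def fake_llm_call(prompt: str, latency_s: float = 0.2) -> str:
    """Stand-in for a network-bound LLM request (hypothetical; no real API is called)."""
    await asyncio.sleep(latency_s)  # simulates waiting on the remote model
    return f"response to: {prompt}"


async def run_concurrently(prompts: list[str]) -> list[str]:
    """Issue all calls at once; results come back in the same order as the prompts."""
    return await asyncio.gather(*(fake_llm_call(p) for p in prompts))


def timed_batch(n: int = 50) -> tuple[list[str], float]:
    """Run n simulated calls concurrently and return (results, elapsed seconds)."""
    prompts = [f"question {i}" for i in range(n)]
    start = time.perf_counter()
    results = asyncio.run(run_concurrently(prompts))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = timed_batch()
    print(f"{len(results)} calls finished in {elapsed:.2f}s")
```

Because the waits overlap, 50 simulated calls of 0.2 seconds each should finish in a fraction of the roughly 10 seconds they would take one after another. Real throughput depends on API rate limits and on the rest of the pipeline.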

Key Limitations:

- No visual interface (code-first)

- Steeper learning curve for non-developers

- Requires engineering resources to maintain

Verdict:

LangChain/LangGraph wins when you need maximum flexibility and deep AI logic, from RAG systems to multi-agent coordination, where Python-native speed and libraries truly pay off.

Google ADK — For Enterprise-Scale, Real-Time AI on Google Cloud

When your business runs on Google Cloud, Google’s Agent Development Kit (ADK) offers the best combination of scalability, performance, and compliance.

It’s optimized for Gemini models, real-time streaming, and multi-modal workloads, while handling infrastructure automatically under GCP’s managed services.

Ideal For:

- Enterprise-scale AI and real-time data streaming

- Secure, compliant environments

- Integration with Vertex AI, BigQuery, and other GCP tools

Business Highlights:

- Auto-scaling by default — handle thousands of requests per second

- Enterprise-grade reliability and observability

- Optimized for Gemini, giving up to 2× faster responses in LLM-heavy workloads

- Fully managed runtime with structured logging and cloud debugging

Key Limitations:

- Vendor lock-in (GCP-centric)

- Platform still maturing, with limited documentation in some areas

- Higher infrastructure costs at scale

Verdict:

Google ADK wins for mission-critical, real-time, or global-scale AI systems—especially when compliance, uptime, and integration with Google Cloud are key.

Which Engine Fits Your Needs?

Choosing your AI automation framework isn’t just about benchmarks—it’s about aligning tools with your business strategy and team capabilities.

At Zazmic, we help clients navigate this decision with clarity—balancing speed, flexibility, and scalability to fit your goals.

Ready to Find Your Perfect Fit?

Let’s build your AI strategy together.

Schedule a free consultation or discuss your AI goals with our experts today.