Gemini 3 is Google’s most advanced multimodal model, built for real-world enterprise use. It combines state-of-the-art reasoning, long-context understanding, native multimodality across text, image, video, and audio, and powerful agentic capabilities. For businesses, it helps teams move from “AI as a helper” to “AI that completes workflows,” from diagnostics to coding to planning. It’s now available in Gemini Enterprise and Vertex AI and powers new agentic experiences across Google Workspace and developer tools.

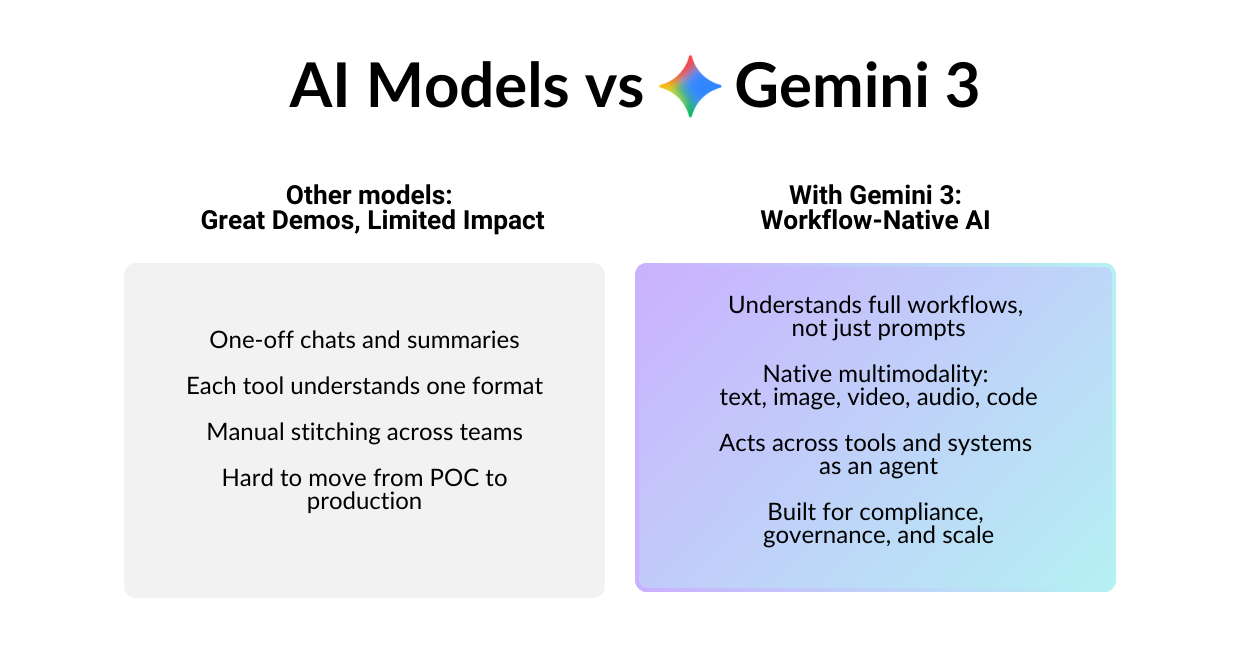

If you’re leading an AI initiative right now, you’ve probably hit the same wall as everyone else: great demos, underwhelming impact. Models can summarize documents, chat about ideas, or draft an email, but they struggle when you ask them to work the way your business actually operates: across tools, formats, teams, and long-running processes.

Gemini 3 fills that gap between AI’s promised and proven value. It’s Google’s latest flagship model, designed not just to answer questions, but to understand full workflows, connect text with visuals, code, and audio, and then help your teams make better decisions, faster.

In this article, we’ll walk through what Gemini 3 really is, what makes it different from previous Gemini models, and most importantly, how it can translate into concrete outcomes for your sales, product, data, and engineering teams.

What Is Gemini 3? A New Standard for Enterprise-Ready Multimodality

Gemini 3 is Google’s latest flagship model built for advanced reasoning, multimodal understanding, and agentic AI. It’s already available in Gemini Enterprise, Vertex AI, and Google AI Studio.

Unlike previous generations, Gemini 3 is designed from the ground up for real-world enterprise use cases: complex data, long documents, mixed media, and workflows that span multiple teams and tools. In other words, it’s not just “the next version,” it’s a new baseline for what you can expect from enterprise AI.

But specs alone don’t tell the full story. To understand why Gemini 3 matters, we need to look at what truly sets it apart from previous Gemini models and competing LLMs.

What Makes Gemini 3 So Different?

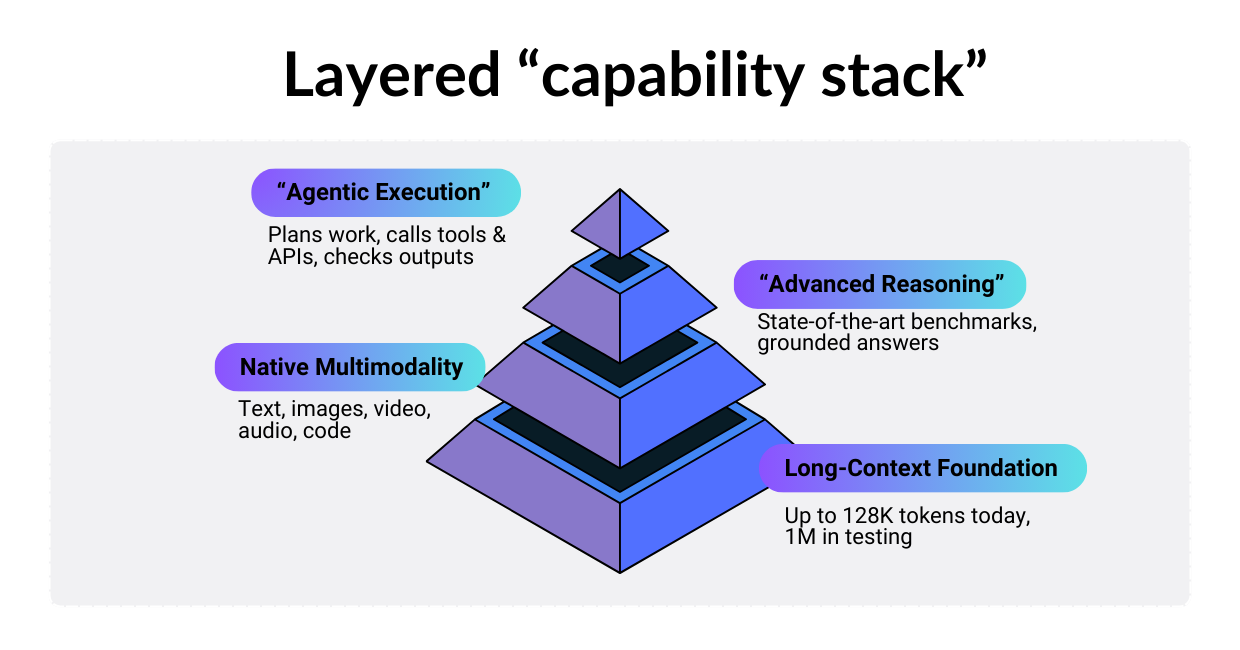

Most AI models are strong at specific tasks: they’re good at text, decent with images, or surprisingly capable with code. But they rarely excel at everything simultaneously, and even more rarely can they connect these capabilities into a unified understanding. Gemini 3 breaks that pattern with the following features:

- State-of-the-art reasoning that outperforms Gemini 2.5 Pro across every major benchmark, from GPQA to Video-MMMU.

- The strongest multimodal performance Google has ever released (text, images, video, audio, code).

- Expanded context window capacity (128K today, 1M in testing).

- The most powerful capabilities for agentic coding and long-term planning.

In other words, this isn’t a model that simply answers your question. Gemini 3 understands entire workflows and complex business contexts. So how does this translate into tangible business outcomes? Let’s break down exactly what Gemini 3 brings to enterprise teams.

How Gemini 3 Helps Businesses: Multimodality for Real Outcomes

The real test of any enterprise AI isn’t what it can do in a demo, but whether it can handle the messy, multi-format reality of how your teams actually work. Gemini 3 was built precisely for that complexity. Here’s how it delivers measurable value across five critical dimensions.

1. Native Multimodal Understanding Across Text, Images, Video & Audio

Earlier models could technically “accept” different file types, but in practice, they treated each one in isolation. Gemini 3 does something more useful. It can look at the numbers in a spreadsheet, the layout in a product screenshot, the flow of a video demo, and the conversation in a meeting recording and treat them as one coherent story.

For a product team, that means you can drop in a short screen recording, a handful of user screenshots, and your release notes, and Gemini 3 can walk through the changes, tie specific user complaints to specific UI or logic issues, and turn that into a clean list of tickets. Instead of someone manually hunting through drive folders, Slack threads, and bug reports, you get a unified, prioritized view of what’s going wrong and why.
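As a rough illustration of the first step in that scenario, the sketch below sorts a mixed bundle of files by modality before it is handed to a model. It uses only Python’s standard `mimetypes` module; the file names and the `group_by_modality` helper are hypothetical, and nothing here represents the actual Gemini or Vertex AI request format.

```python
import mimetypes
from collections import defaultdict


def group_by_modality(filenames):
    """Group file names by the top-level MIME type guessed from the extension."""
    groups = defaultdict(list)
    for name in filenames:
        mime, _ = mimetypes.guess_type(name)
        # "video/mp4" -> "video"; files with an unrecognized extension go to "unknown"
        modality = mime.split("/")[0] if mime else "unknown"
        groups[modality].append(name)
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical bundle: one screen recording, two screenshots, release notes
    bundle = ["demo.mp4", "complaint_1.png", "complaint_2.png", "release_notes.txt"]
    for modality, files in group_by_modality(bundle).items():
        print(modality, files)
```

A grouping like this makes it easy to confirm that every input type you intended to include is present before the request is sent.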

2. 128K Context Window for Complete Document & Media Understanding

Most AI tools still force you to feed information in pieces. Gemini 3 is designed to work with the full picture. It can take long contracts, deep email threads, reports, logs, and even media transcripts as one continuous context, and then reason over all of it.

- A legal team, for example, doesn’t have to choose between “just the main agreement” or “just the side letters.” They can include the 50-page contract, the back-and-forth emails, and a summary of prior deals, and ask Gemini 3 to surface non-standard clauses, conflicting terms, and items that require escalation.

- A data or SRE team can send months of logs alongside a short screen recording of a failure and ask where patterns line up and what’s likely causing the issue.

Because the model is working with the complete story instead of disconnected fragments, you get fewer context misses, faster reviews, and more confidence in the outcomes. In areas like compliance, vendor negotiations, and incident response, that often translates directly into lower risk, less rework, and shorter time-to-resolution.
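Before sending a large bundle, it helps to estimate whether it fits in the context window at all. The sketch below is a back-of-the-envelope check, not an exact tokenizer count: the 4-characters-per-token ratio and the reserved headroom are assumptions, the 128K figure is the one quoted in this article, and the helper names and placeholder documents are hypothetical.

```python
from __future__ import annotations

CONTEXT_WINDOW_TOKENS = 128_000  # figure quoted in this article
CHARS_PER_TOKEN = 4              # assumption: rough rule of thumb for English text
RESERVED_FOR_ANSWER = 8_000      # assumption: headroom left for the model's reply


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: dict[str, str]) -> tuple[bool, int]:
    """Return (fits, total_estimated_tokens) for a bundle of documents."""
    total = sum(estimate_tokens(body) for body in documents.values())
    budget = CONTEXT_WINDOW_TOKENS - RESERVED_FOR_ANSWER
    return total <= budget, total


if __name__ == "__main__":
    # Placeholder text standing in for real documents
    bundle = {
        "contract.txt": "x" * 200_000,
        "email_thread.txt": "x" * 120_000,
        "prior_deals.txt": "x" * 40_000,
    }
    ok, total = fits_in_context(bundle)
    print(f"estimated tokens: {total}, fits: {ok}")
```

For real workloads, the platform’s own token-counting facility gives a more reliable number than a character-based estimate, especially for media and non-English text.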

3. Advanced Reasoning for Higher Accuracy and Better Decisions

While many AI tools can summarize, far fewer can actually reason at a level you’d trust with important decisions. Gemini 3 is designed to close that gap. Independent evaluations show it consistently ranks among the top models for reasoning and factual reliability.

In practical terms, it means you can lean on Gemini 3 for work that goes beyond “tell me what’s in this document” and into “tell me what this means and what we should do.” The model is better at staying grounded in the evidence you provide, aligning its answers with that context, and avoiding the kind of confident but incorrect output that erodes trust.

In day-to-day use, that translates into insights that are:

- more grounded in your actual data

- more consistent from one query to the next

- less prone to hallucinations

- structured as clear, actionable recommendations.

This shift from descriptive to genuinely prescriptive insight turns AI from a reporting layer into a strategic partner that helps explain why something is happening and what to do about it.

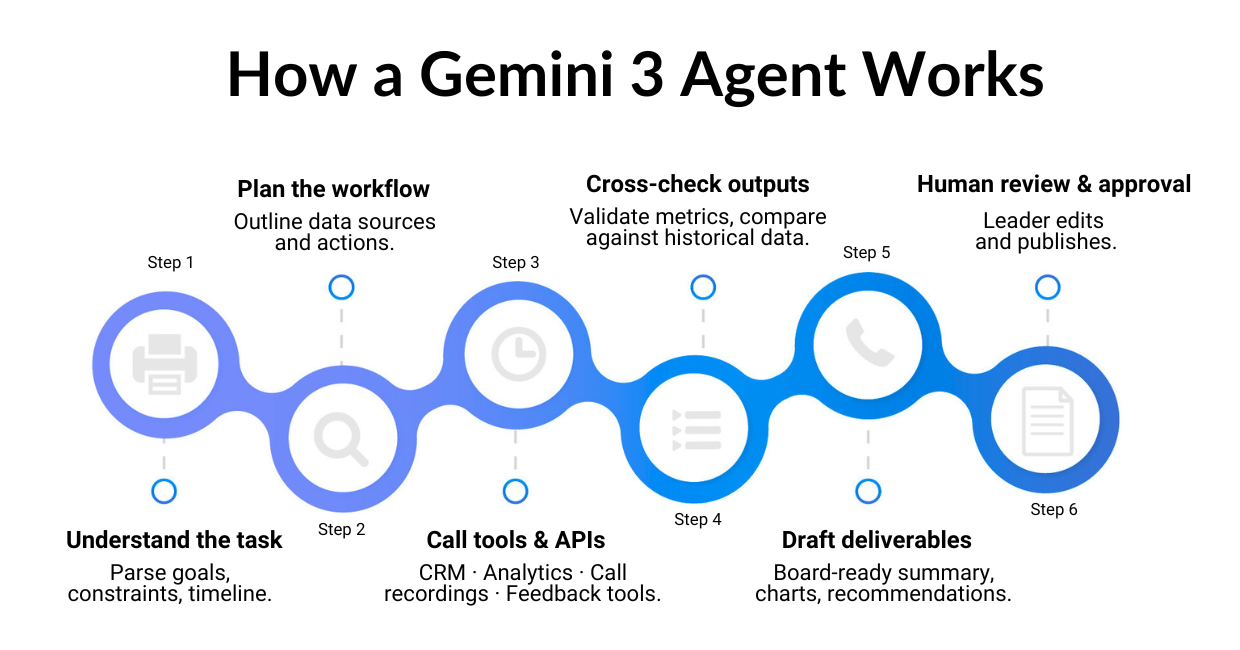

4. The Best Model for Agentic Workflows

Where Gemini 3 really separates itself is in how it behaves as an agent, not just a question-answering tool. Instead of responding to one prompt at a time, it can plan work, call tools and APIs, check its own outputs, and act across your existing systems.

Now, you can give an agent a task like this:

“Review our Q4 pipeline, analyze the demo recordings, check churn feedback, and prepare a board-ready summary with recommendations.”

Gemini 3 can actually do all the steps, not just answer the last sentence. These capabilities are fully available to teams through Gemini Enterprise, Google’s platform to create, run, and manage advanced AI agents.
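To make the plan, call, and check pattern concrete, here is a minimal sketch of an agent loop. It is an illustration under stated assumptions, not how Gemini Enterprise is implemented: the plan is hardcoded where a real agent would have the model produce it, the three tools are hypothetical stubs returning placeholder strings, and the self-check is a simple non-empty test.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tool stubs; each stands in for a real integration.
def review_pipeline() -> str:
    return "placeholder pipeline summary"


def analyze_demo_recordings() -> str:
    return "placeholder recording analysis"


def check_churn_feedback() -> str:
    return "placeholder churn themes"


TOOLS: dict[str, Callable[[], str]] = {
    "review_pipeline": review_pipeline,
    "analyze_demo_recordings": analyze_demo_recordings,
    "check_churn_feedback": check_churn_feedback,
}


@dataclass
class AgentRun:
    findings: dict[str, str] = field(default_factory=dict)
    failed: list[str] = field(default_factory=list)


def run_plan(plan: list[str], tools: dict[str, Callable[[], str]]) -> AgentRun:
    """Execute each planned step, keeping only results that pass a basic check."""
    run = AgentRun()
    for step in plan:
        tool = tools.get(step)
        if tool is None:
            run.failed.append(step)  # no tool registered for this step
            continue
        result = tool()
        # Self-check: do not pass empty output downstream
        if result.strip():
            run.findings[step] = result
        else:
            run.failed.append(step)
    return run


def summarize(run: AgentRun) -> str:
    """Turn the collected findings into a short bullet summary."""
    lines = [f"- {name}: {text}" for name, text in run.findings.items()]
    if run.failed:
        lines.append("- needs follow-up: " + ", ".join(run.failed))
    return "\n".join(lines)


if __name__ == "__main__":
    plan = ["review_pipeline", "analyze_demo_recordings", "check_churn_feedback"]
    print(summarize(run_plan(plan, TOOLS)))
```

The point of the structure is that failed or unavailable steps are surfaced explicitly in the summary instead of being silently skipped.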

5. Stronger Coding, Debugging & Front-End Generation

On the engineering side, Gemini 3 moves beyond “autocomplete” and starts to behave like a capable co-developer. It can understand your codebase, documentation, and logs together, then help you design, implement, and debug features with much less friction.

Practically, this means:

- Generate fully functional UI layouts from a single prompt

- Refactor entire modules in one go

- Debug issues by providing code, screenshots, and logs

- Build custom agents using Google Antigravity

Gemini 3 is more than just “good at coding.” It helps teams ship cleaner code faster, reduce time spent debugging, and prototype interfaces and internal tools in hours instead of weeks. At the same time, engineers stay in control, reviewing and guiding the AI rather than starting from a blank file.

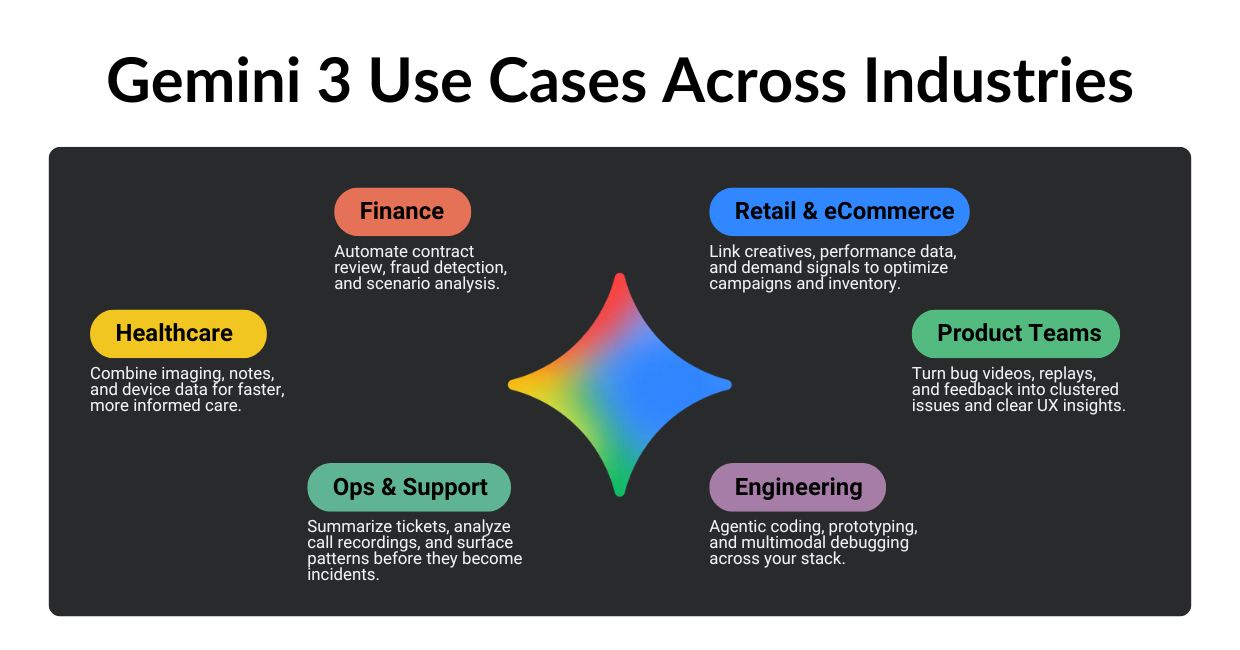

Where Gemini 3 Fits Into Your Workflow

Gemini 3 is not limited to one vertical or team. Anywhere your organization works with complex, mixed-format information, the model can help unify that data, reason over it, and drive more confident decisions.

Here’s how teams across sectors are already putting it to work, and what becomes possible when AI can truly see, reason, and act across your entire operation.

Healthcare

Interpreting imaging and notes together

Combine radiology images, lab results, and clinician notes so Gemini 3 can surface likely diagnoses and recommended follow-up questions for the care team.

Summarizing long patient histories

Condense years of visits, referrals, and test results into a concise clinical snapshot to support faster, more informed decision-making at the point of care.

Spotting anomalies in multimodal data streams

Analyze vitals, device data, and reports together to flag early warning signs that might be missed when systems and formats are siloed.

Finance

Contract review

Ingest full legal agreements, term sheets, and side letters to highlight risk clauses, inconsistencies, and non-standard terms automatically.

Fraud detection from logs and screenshots

Correlate transaction logs with UI screenshots or support recordings to spot suspicious patterns and reproduce user flows that led to anomalies.

Automated financial planning

Pull in budget spreadsheets, market commentary, and scenario assumptions to generate draft forecasts, risk analyses, and “what-if” scenarios for review.

Retail & eCommerce

Performance analysis

Link creative assets with click-through rates, conversion, and returns data to identify which visuals actually drive performance.

Local-language campaign generation

Create on-brand, localized copy and visuals for new markets, tuned to local preferences and regulations.

Inventory forecasting

Fuse sales history, supplier data, and external signals (seasonality, campaigns, events) to improve demand forecasting and reduce stockouts or overstock.

Product Teams

Video bug triage

Analyze bug repro videos and logs together, cluster similar issues, and propose likely root causes or steps to reproduce.

UX analysis

Synthesize usability test recordings, session replays, and survey responses into clear UX insights and prioritized opportunities.

Release note automation

Turn commit history, Jira tickets, and internal docs into clean, user-friendly release notes tailored for different audiences (end users, sales, support).

Engineering

Agentic coding

Let Gemini 3 act as a coding agent that reads specs, interacts with repos, runs tests, and opens draft PRs for review.

App prototyping

Generate initial service skeletons, front-end components, and integration glue code from product brief documents and design mocks.

Multimodal debugging

Provide stack traces, logs, screenshots, and config files together so the model can trace issues across layers and suggest concrete fixes.

Across industries, the pattern is the same: Gemini 3 helps teams do more with the data and assets they already have, with less manual stitching, faster iteration, and more reliable outcomes.

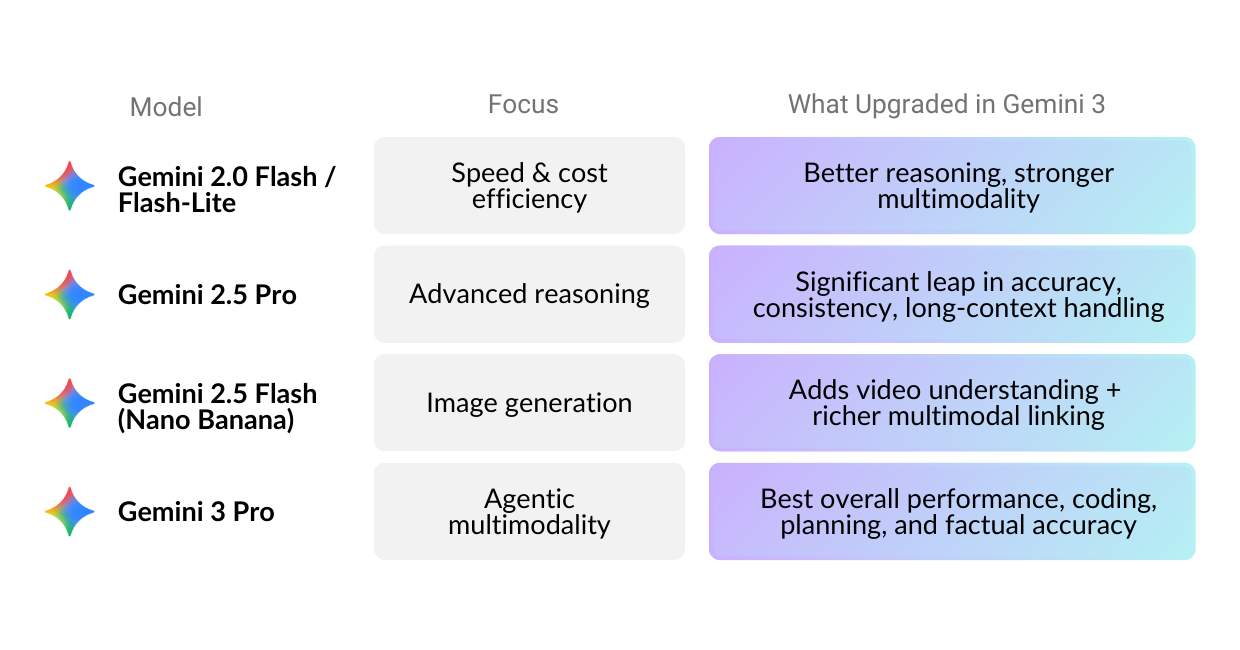

How Gemini 3 Compares to Previous Gemini Models

Google has been iterating rapidly on its AI capabilities, and each Gemini release has brought meaningful improvements. But Gemini 3 represents more than just another step forward. It’s a consolidation of everything Google has learned about building enterprise-ready AI, now available in a single, exceptionally capable model.

Here’s how it stacks up against its predecessors and what’s actually different under the hood.

For teams on any previous Gemini tier, Gemini 3 offers a clear, measurable upgrade: deeper reasoning, more formats understood natively, and better alignment with real enterprise workloads rather than synthetic tests alone.

How Gemini 3 Connects to Other Google AI Tools

Gemini 3 is even more powerful when it’s plugged into the broader Google AI ecosystem. Rather than deploying a standalone model, you can use it as the reasoning core inside tools your teams already rely on.

Gemini Enterprise

Gives your teams access to Gemini 3 inside Workspace, with governance, safety, and agent creation tools.

Veo 3

Works alongside Gemini 3 to generate higher-fidelity videos for marketing, product explainers, training simulations, and multimodal workflows.

Nano Banana Pro

The image-generation model that complements Gemini 3 for creative and vision-heavy tasks.

Together, these tools form a complete AI stack where Gemini 3 handles reasoning and orchestration, while specialized models like Veo 3 and Nano Banana Pro execute creative tasks. For businesses building AI-native operations, this kind of ecosystem integration is the foundation of scalable, reliable automation. And because everything runs on Google’s infrastructure, you get enterprise-grade security, compliance, and support built in from day one.

Ready to Put Gemini 3 to Work?

Gemini 3 marks a turning point in enterprise AI. It’s the first Google model designed to truly understand your entire workflow across text, visuals, audio, code, and long-form information.

The promise of AI has always been to augment human capability, not replace it. Gemini 3 delivers on that promise in ways previous models couldn’t. It sees what your teams see. It reasons through the same complex trade-offs. And most importantly, it acts with the kind of consistency and reliability that enterprise operations demand.

Want to understand how Gemini 3 can work inside your business?

Book a free strategy call with Zazmic, and our AI experts will walk you through real use cases tailored to your industry.

FAQ

1. What is Gemini 3?

Gemini 3 is Google’s newest multimodal foundation model with advanced reasoning, long-context processing, and agentic capabilities, available through Gemini Enterprise and Vertex AI.

2. What makes Gemini 3 better than Gemini 2.5?

It outperforms Gemini 2.5 Pro on every major benchmark, offers deeper multimodal reasoning, handles longer context windows, and supports more reliable multi-step agentic workflows.

3. Can Gemini 3 analyze videos?

Yes. Gemini 3 has the strongest video understanding in Google’s model lineup, scoring 87.6% on Video-MMMU.

4. Is Gemini 3 available for enterprises today?

Yes. It is available in Vertex AI and Gemini Enterprise, Google’s secure platform for deploying, governing, and scaling AI agents.

5. Does Gemini 3 support coding tasks?

Absolutely. It is Google’s best model for agentic coding, front-end generation, debugging, and long-context code understanding.